http://www.meetup.com/Nelson-SQL-Server-User-Group/events/228632044/

Author Archives: Rob

SQL Saturday Sydney

SQL Saturday Sydney is on 27th February and includes….ME! And some good speakers.

If you are going to be in the Sydney area I strongly recommend that you come along for a great day of free SQL content from some fantastic International speakers.

http://www.sqlsaturday.com/468/eventhome.aspx

I’ll be doing a presentation on High Availability and disaster recovery, talking about the various options that SQL Server offers out of the box to provide them, and some lessons learned from the Christchurch Earthquakes.

Hope to see you there.

Should I just Ignore CXPacket Waits

The CXPacket Wait is one of the most misunderstood and worst explained wait types I’ve come across. It’s not so much that people don’t know what it is, but that people don’t know when they need to do something about it. There’s a lot of old posts telling you to set maximum Degree of parallelism to 1 and the wait type goes away(Which is true, but not the best advise), then there’s a lot of newer posts that tell you that’s a bad idea as it stops all parallel execution of queries, but don’t explain when we should and shouldn’t be worries about it.

So What is the CXPacket Wait Type?

Let’s start with a recap. A CX Packet is a “Class Exchange” Packet, and the old entries in Books Online tell us we “You may consider lowering the degree of parallelism if contention on this wait type becomes a problem”. There’s a large part of that statement that hangs on the ‘If contention on this wait type’ part of the statement and a lot of unanswered questions. How do I know if I have contention on this wait type? What should I do if I do?

What’s actually going on here?

When a query goes is able to go parallel(That is it has a cost greater than the cost threshold of parallelism setting, contains operators that would benefit from parallelism, and the maximum degree of parallelism setting not 1), it can be broken up into a group of threads to perform the tasks needed to complete the query. It also requires a thread dedicated to look after all the other threads, co-ordinate and collate their efforts. Brent Ozar explains this like a teacher and a class of students. Some tasks are so simple that either the teacher or any of the students could do them quickly alone, while others will complete faster if the whole class works on them with the teacher ensuring that everyone is ‘on task’. CXPacket waits occur when threads finish at different times and the query is waiting for the remainder of threads to finish. They also accumulate while the ‘teacher’ thread is waiting.

So it’s not really a bad thing in itself, hence the number of newer posts about it which are absolutely against the ‘Set max Degree of Parallelism to 1’ solution. I agree. On modern servers with dozens of cores it seems ludicrous that you would enable a server wide setting to that limits the use of those cores and effectively cripples the performance of queries that were executing fine in parallel. Which bring me back to the subject of the post…

Should I just Ignore CXPacket Waits?

I’ve seen the odd suggestion to just ignore it, or add it to the benign wait type list in whatever query or tool you use to monitor your waits. Most of the time you can, except then when it is a real problem you can’t. So what should you do?

I think the change in the Books Online entry for CXPacket waits gives us the best clue. In 2008 R2 and earlier it says this: “Occurs when trying to synchronize the query processor exchange iterator. You may consider lowering the degree of parallelism if contention on this wait type becomes a problem.” In 2012 and 2014 it says this: “Occurs with parallel query plans when trying to synchronize the query processor exchange iterator. If waiting is excessive and cannot be reduced by tuning the query (such as adding indexes), consider adjusting the cost threshold for parallelism or lowering the degree of parallelism.” In other words you probably want to take this from a server level problem to an individual query problem.

Look at the queries that are generating high CXPacket waits. You’ll need to query out of the plan cache to identify them and grab the top 2 or 3 offenders. Take those over to your test system and verify you see the same behavior. Now test run-time with Max Degree of Parallelism set as per production and then set as 1. What you are most likely to see is that the query performs better with Parallelism enabled. This doesn’t prove CXPackets are not an issue, but does show how bad a solution setting Max Degree of Parallelism is. Now look at your other wait types – these are likely the true source of your issue. You are likely to see scheduler yields or IO Pagelatches which will indicate benefits can be gained from setting the right indexes. You may see the query run faster on test than in production – this can indicate that on production there is contention on another resource.

Once you’ve tuned the heck out of the top few offenders take them back to prod and you should see a reduction in CXPacket. You can repeat that exercise from time to time, particular the comparing run-times to see how much difference the Parallelism makes.

Some Considerations

- If you are using Max Degree of Parallelism at one you can still see CXPacket waits. Developers or DBA can fine-tune queries using the MAXDOP hint to bypass the server wide Max DoP settings.

- Some Applications(Ahem…Microsoft Dynamics AX, Microsoft Sharepoint….) have a vendor recommendation to disable server wide parallelism. See the above point on how to stay supported but also increase large query speed.

- Look at your plan cache as a whole and work out what constitutes a big query and a little one in terms of query cost. You can use Cost Threshold for Parallelism appropriately to dictate which queries go Parallel and which ones don’t.

- Don’t be hamstrung trying to find ‘best practice’. Don’t take any advise you find on the internet as gospel. Your production system is different from everyone elses, and you may need to do some experimentation to find the best setting for you.

And finally, a word of warning. Don’t write a blog post on Parallelism if you can’t spell Parallelism. I think I spelled it wrong about 20 times above:) This should probably expand to a lengthy client discussion on Parallelism, it’s hard enough to spell, you should try saying repeatedly in a conference call!

Backup Questions to ask: Part Two – Retention

In my previous post on backups I talked about division of responsibility and documenting your process. In this post I wanted to talk about backup retention, how to decide what that retention should be, and how to get the most millage out of your local disks.

I’m going to be talking about a simple backup process involving the DBA taking care of native SQL backups to disk, and the system administrator taking those flat file backups and moving them to long term storage. I defined it in my previous post like this:

I try and simplify backups to something approximating the below:

1. The DBA takes the native SQL Server backups to a local disk. These backups are retained for X days.

2.The system administrator is responsible for taking a copy of the native SQL backups from the local drive and copying them to long term storage, where they are retained for X2 days\month\years.

Once you have agreed the above process it should be a relatively simple task of working out what is X and what is X2 and then putting it in place. You’ll notice the most technical I have got to this point is referring to ‘native SQL backups’ and ‘long term storage’. I haven’t talked about full backups, differentials or log backups. I haven’t spoken about high availability or disaster recovery. The reason for this is right now this is not a technical discussion, it’s still a business one. It’s really easy for us as DBA’s to jump in and put in place a standardized recovery process, and in most cases that’s good enough. But actually what we need to do first is understand what the business needs for retention.

X and X2 are going to define your Recovery Point Objective – the point in time you could recovery to. In other words how much data could you potentially lose if you restore to a certain point in time. This is important, but it’s also only part of the picture.

A consideration that is regularly overlooked is the Recovery Time Objective – or how long can an application be offline. This is critical and something you simply don’t know unless you perform semi-regular restore tests. The reason I say that is that if you have an RTO of 1 hour, and your full backup takes two hours to restore – you will never be able to meet that target with backups alone. You simply can’t make restores run faster after a certain point. If you have an RTO that is less than the time it takes to recovery your backups, then you need to provide that availability another way – and that’s where the discussion of high availability needs to begin. We’ll dig more into that in a future post.

Assuming that you can restore within the RTO in all your known scenario’s then the only question is developing a backup strategy to meet your RPO. The starting point for a lot of DBA’s is a nightly full backup and 5,10,15 or 60 minute transaction log backups. This meets the most common restore scenario I have seen(most restores are within the past 24 hours in my experience). But it also plays havoc with the amount of data you are moving to long term storage.

I’ve recently been experimenting with much longer recovery chains. A monthly full backup, weekly differentials and log backups every 15 minutes has proven to be a good starting point in a lot of the bigger databases we support. These databases are often 95% or more data that never gets updated, and the updates are quite small and not that heavy. In one case we moved from a 254GB nightly backup to this model and the total combined size of a months differential and log backups was 23GB. That allowed us a massive saving of disk space with much faster restores to any point in time in the last month.

My point is it’s not 2000 any more, the GUI allows us to create restore scripts so we are not relying on manually crafting restore processes with 500 log backups – SSMS spits the code out for us. It’s as much work for you to hit F5 whether it’s restoring 10 log backups or 200.

The other approach to this is breaking archived data into partitions or using file groups so that you are only ever taking backups of the data that is actually changing. SQL Servers backup command is incredibly flexible so get to know your data and design a plan that gives you the best bang for buck rather than persisting with a generic plan on all your databases.

AX Cannot Create Table in TempDB error – Resolved

We’ve been migrating a client to new AX servers and we hit an error that I’ve seen a few times now where AX attempts to create a table in the tempdb database and is denied permissions.

Object Server 01: The database reported (session 14 (user)): [Microsoft][SQL Server Native Client 10.0][SQL Server]CREATE TABLE permission denied in database ‘tempdb’.

This error is due to AX’s method of using persistent ‘temp’ tables in the tempdb database(The same design decision that makes high availability for AX such a chore), and is not difficult to resolve.

To understand the problem you need to know a little about the way TempDB works. It’s designed as a container for temporary objects and overflow operations, and to ensure it is tidied up it is recreated each time a SQL Server is started. This means that as well as all tables in the database being removed, that all user permissions granted on the table are also wiped. AX uses this database in a slightly non-standard way, creating a group of tables on connection and persisting them until reboot. If these tables are removed(for instance by a FCI or AOAG failover) a number of errors of the ‘table not found’ variety will occur resulting in an AOS reboot being required. Additionally, when SQL Server restarts(or fails over) the rights on tempdb need to be reapplied so when the AOS reconnects it can recreate all it’s temporary tables. So having that background:

Solution Number One – Stored Procedure in Master Database

First identify the account which requires the permissions to tempdb in the first place. This should be the service account running the AOS. Then create a stored procedure in the master database such as the below:

CREATE procedure [dbo].[CREATETEMPDBPERMISSIONS_tempdb] as

begin

exec (‘USE tempdb; declare @dbaccesscount int; exec sp_grantlogin ”mydomain\myAOSserviceaccount”; select @dbaccesscount = COUNT(*) from master..syslogins where name = ”mydomain\myAOSserviceaccount”; if (@dbaccesscount <> 0) exec sp_grantdbaccess ”mydomain\myAOSserviceaccount”; ALTER USER [mydomain\myAOSserviceaccount] WITH DEFAULT_SCHEMA=dbo; exec sp_addrolemember ”db_ddladmin”, ”mydomain\myAOSserviceaccount”; exec sp_addrolemember ”db_datareader”, ”mydomain\myAOSserviceaccount”; exec sp_addrolemember ”db_datawriter”, ”mydomain\myAOSserviceaccount”;’)

end

EXEC sp_procoption N'[dbo].[CREATETEMPDBPERMISSIONS_tempdb]’, ‘startup’, ‘1’

This will fire whenever SQL Server starts and create the user and assign the permissions required. Obviously replace mydomain\myAOSserviceaccount with the account used to run your AOS.

Solution Two – The Sledgehammer

There’s various reasons where the above won’t be ideal. Multiple AOS service accounts, policy disallowing user stored procedures in system databases etc. You can achieve the same result by granting the login(s) that require access to the model database. This should then pass through to the tempdb database when the server is restarted.

Solution Three – The Non-DBA approach

I’m a database guy and not an AX guy, so I tend to look for database resolutions to problems. I believe – but have not had confirmed, that a full database sync will also resolve this issue. I haven’t seen this sorted from the application side but have read it in a few places now. And if it’s on the internet it must be true.

Backup Questions to ask – Part One: Division of Responsibility

I’ve written a bit about various backup products for SQL, but I wanted to extend on that a little bit to focus on some of the specifics. In this post we will be talking about the division in responsibility for backups and restores between the DBA and the system administrators.

These two people are often the same, but in this post I will treat them as if they are two different people. At a minimum it is two different functions of their job, and I’m a believer that if you have to wear many hats you yourself should act as though you have two jobs. That way when your boss comes along and offers you another employee to help you out, you can just hand over one of your hats like it’s a pre-packaged job.

Despite the improvement of specialist products for taking SQL backups, I still have a strong preference that native SQL Server backups are used. If pushed I will say – the best backup solution is probably the one which the person doing the restore is most familiar with, which is a slightly different proposition, but I’m going to assume the person responsible for the backups should be the DBA, because they are the one most likely to be in the firing line if the database can’t be restored.

I try and simplify backups to something approximating the below:

- The DBA takes native SQL Server backups to a local disk. These backups are retained for X days.

- The system administrator is responsible for taking a copy of the native SQL backups from the local drive and copying them to long term storage, where they are retained for X2 days\months\years.

It then follows that if a restore is needed to a database server within X, then the DBA can do that directly from disk. If a restore point is between X and X2, the system administrator can restore the backup from long term storage back to the local drive, where the DBA can proceed with the install.

Then all that is required is defining X and X2, and scheduling in occasional test restores to random dates to prove the whole system works.

Now most people reading this will (I hope) be waiting for something interesting in all this. It’s an incredibly simple setup, very clear who is responsible for what and if a backup can ever not be restored – it should be very clear as to who has dropped the ball.

But here’s the thing, in my role I get to see an insight into multiple different SQL environments, and it seems even this very simple process can get horribly tangled up resulting in a whole lot of confusion, finger pointing, and ultimately data loss. The situation is complicated enough when one person is responsible for both jobs. WHen it’s two people it becomes a little murkier, and when it’s multiple people across multiple business units then it quickly sinks to a level where it’s really easy to find you don’t have a backup right at the most critical point – when you need a restore. Add in 1 or more external vendors and things can quickly spiral out of control.

Do you know your restore processes? If I was to ask for a restore of a given database to a given date could you tell me?

- Who I should actually be asking?

- What is the longest ago I can restore to?

- What points in time can I restore to? Real time? Nightly backups? Monthly archives?

- Who needs to authorize a production restore?

- Who needs to perform a production restore?

If all of those questions are not immediately clear, then I’m going to have to raise the D word – Documentation. Someone needs to take responsibility for documenting the backup and restore processes. They need to interview all the people we think are involved in the process and get a really clear understanding of what they are doing, what they think other people are doing and then where there are any mismatches.

As a job – it sucks, but it can be quite enlightening. And it’s better doing a job that sucks that averts a disaster, than not doing the job that sucks, and finding out it sucks not to have a job.

Always On Availability Group Secondary Becomes Unreadable

An interesting issue occurred in a client environment today. The configuration was an Always On Availability Group which had a primary replica(Also a SQL cluster), a reporting replica(non-voting) and a DR replica in a different data center. There was a cluster issue the previous night which saw the report server removed from the cluster. The cluster and the availability group both recovered within 60 seconds. However one of the databases in the AOAG could not be queried from. The Availability group confirmed the database was healthy and data was transferring to it fine, but any select query just ran forever. I ran through a list of checks, scanned the error logs and then went through a number of fruitless steps to get things running again:

- Consistency check on the database was clean.

- Database exhibited the same behaviour when removed from and then returned to the availability group.

- Database exhibited the same behaviour when the replica was removed from and added back into the availability group(Not that I expected any different after the previous test, but if we didn’t try things we thought wouldn’t work we’d never solve half our IT problems).

- I even transferred the DR server to readable state and confirmed the same behaviour existed there.

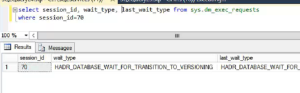

That all amounted to quite a bit of wasted time trying to find a quick fix, rather than really understanding the issue. So I checked exactly what the select thought it was waiting on:

The wait type HADR_DATABASE_WAIT_FOR_TRANSITION_TO_VERSIONING is not all that well documented but I got enough information from this post and especially this one too to see where the issue lay.

There was an open transaction on the production database. Until that was either committed or rolled back the secondary replica cannot use snapshot isolation transaction level, and therefore will not return a response(either error or result set) from the SELECT statement. We tried to stop the transaction from the application side, but ultimately ended up issuing a KILL command from within SQL.