Because I work in IT, I quite regularly get asked to do any number of things that I have never done before, on the basis that they involve computers (or increasingly phones), or the internet, or something… geeky. The idea is that because we work in one area of IT, our skills should neatly transfer to other areas.

This is an annoyance in your personal life. Of course I have no experience in how to set up photo sync on your iPhone, I have an Android, but actually, I can probably figure out how it’s done faster than the average user. Hook up your new PlayStation and transfer your accounts? I mean… sure… I don’t own one, but I can read things on a screen and guess which button to press. But that’s quite low-stakes stuff. The workplace equivalent is when a sysadmin or a web developer is expected to check in on the databases. In the former case they are probably looking at the database server as a bunch of files on disk; in the latter they are thinking of it as a bunch of tables to access to serve data. They have become an accidental DBA, and the expectation that has been placed on them is, quite simply, not fair.

Databases are incredibly complex beasties, and they shouldn’t be treated as a bunch of tables, or a bunch of files. They are databases, residing on database servers, and the skillset required to properly administer them is very specific and quite hard to define. But in this case I’m going to carve off the first three things to master if you find yourself lumped with the DBA tasks with one of those lovely little throwaway lines like “Hey, seeing as you have sysadmin, can you just make sure the SQL Servers are okay?” If you accidentally don’t say no to that question, it will be the first of many questions that start with “Can you just…”, because everything about databases SEEMS like it should be quite simple to someone who doesn’t know how they work. Worse still, it may seem quite simple to you too, meaning you can do incredible damage very quickly if you are not fully aware of the implications of the actions you’ll be taking.

Number One: Backups

Okay… so when you said “I guess” to that you just made yourself responsible for the availability of data within your organisation. And you have to do that around your real job? Here’s the first thing that can go wrong – someone just accidentally overwrote the database that controls all the company orders – you are about to get one of those “Can you just?” questions. “Can you just restore that back to this morning at 10:36?”

Well can you?

The first skill you need to master is setting up SQL backups. That’s so much more than just running a backup command. You are not just creating a rollback point prior to a deployment, you now need to understand what the business expectations are around recovering data and how to implement them. I’ve already dedicated a bunch of posts on this blog to how you go about doing this, but I’ve barely scratched the surface. The key bits:

- Get someone to agree to the requirements.

- Get the disk and archive resource available to meet the requirements.

- Implement a plan that makes sense and meets the requirements.

- Test your restores.

Which of those is the most important? The next one on the list. You need to have done ALL of these before you should feel comfortable with your ability to restore data in your environment.
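To make the “10:36” question concrete, here is a minimal Python sketch of how a point-in-time restore sequence is usually worked out from a backup history. It assumes the database is in the full recovery model with full, differential and log backups being taken. The `Backup` record, the `restore_chain` function and the file names are all hypothetical, and real tooling would compare LSNs rather than timestamps, so treat it as an illustration of the reasoning rather than a restore tool.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass(frozen=True)
class Backup:
    kind: str           # "full", "diff" or "log" (hypothetical labels)
    finished: datetime  # when the backup completed
    path: str           # where the backup file lives


def restore_chain(backups: List[Backup], target: datetime) -> Optional[List[Backup]]:
    """Return the backups to restore, in order, to reach `target`.

    Returns None when the history cannot reach that point in time.
    """
    ordered = sorted(backups, key=lambda b: b.finished)

    # 1. Start from the most recent full backup taken before the target.
    fulls = [b for b in ordered if b.kind == "full" and b.finished <= target]
    if not fulls:
        return None
    full = fulls[-1]
    chain = [full]

    # 2. Add the most recent differential after that full, if there is one.
    diffs = [b for b in ordered
             if b.kind == "diff" and full.finished < b.finished <= target]
    base = diffs[-1] if diffs else full
    if diffs:
        chain.append(base)

    # 3. Add every log backup after that, up to and including the first one
    #    that finishes at or after the target (the one you would stop inside).
    for log in (b for b in ordered if b.kind == "log" and b.finished > base.finished):
        chain.append(log)
        if log.finished >= target:
            return chain

    # No log backup covers the target time.
    return None
```

If this returns `None`, either no full backup precedes the requested time or no log backup covers it. In the second case you would need a tail-log backup before you could honestly answer “yes” to the 10:36 question.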

Number Two: Monitoring

What do you do if one of those backups you just spent 3 months getting everything in place for fails? Do you even know it happened?

SQL Server has a wonderful selection of built-in tools to help you out. You want to make sure that if something goes wrong, you know about it. Here’s a selection of SQL Server components you may want to look into to make that happen.

- Database Mail – this allows you to send emails directly from the server. These can be set up on job failure, fired from alerts, set up as steps in maintenance plans or dropped directly into T-SQL code.

- SQL Alerts – These can be configured as triggers on certain error conditions, and then set up to perform a response action – for instance kicking off a SQL Agent job or sending a Database Mail message.

- Maintenance Plans – These often get a bad rap in the SQL community, because they are quite limited in the options available for the main tasks they were designed for (SQL maintenance: backups, optimisation of indexes and database consistency checks). But they are actually incredibly powerful because of the logic flow built into them. You have the ability to trigger an action and, depending on whether it succeeds or fails, fire off a different response. A series of maintenance plans consisting of T-SQL tasks can be an incredibly powerful addition to your toolbelt.

Now, rolling your own monitoring can be a time-consuming process and somewhat prone to error. My advice is never to set up alerts that come to you personally. Set them up to go to a generic address like DBAalerts@yourcompany.com. It’s fine to have that simply forward everything on to you, but you want whoever takes over from you to be able to start picking up alerts straight away, not have to go through and manually change everything you have set up.
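As an illustration of the “do you even know it happened?” check, here is a small Python sketch that flags databases without a recent backup and composes an alert to the generic mailbox. It assumes the last backup time per database has already been collected (on SQL Server that history lives in the `msdb.dbo.backupset` table, for example its `backup_finish_date` column). The function names, the server name in the usage note and the 24-hour threshold are hypothetical, and actually sending the message is left out.

```python
from datetime import datetime, timedelta
from email.message import EmailMessage
from typing import Dict, List, Optional

# Generic mailbox from the advice above, not a named person.
ALERT_ADDRESS = "DBAalerts@yourcompany.com"


def stale_backups(last_backup: Dict[str, Optional[datetime]],
                  now: datetime,
                  max_age: timedelta) -> List[str]:
    """Names of databases with no backup at all, or none newer than max_age."""
    return sorted(
        name for name, finished in last_backup.items()
        if finished is None or now - finished > max_age
    )


def build_alert(server: str, stale: List[str]) -> Optional[EmailMessage]:
    """Compose (but do not send) an alert; returns None when nothing is stale."""
    if not stale:
        return None
    msg = EmailMessage()
    msg["To"] = ALERT_ADDRESS
    msg["Subject"] = f"[{server}] {len(stale)} database(s) missing a recent backup"
    msg.set_content("\n".join(stale))
    return msg
```

Calling `build_alert("SQLPROD01", stale_backups(times, datetime.now(), timedelta(hours=24)))` would give you either `None` or a message ready to hand to whatever mail mechanism you use. Because the recipient lives in one constant, handing the alerts over to your successor is a one-line change.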

Number Three: Automation

I’ve already talked about a number of processes and tools that you can set up once and then only need to revisit if something goes wrong. The old-fashioned DBA would log into each server and work through a checklist of tasks, and that just sucks. One of the things you will find very quickly as an Accidental DBA is that once people know you are the one who can make things happen on the SQL Server, you will be hit from all sides with all sorts of requests. Your time will be too scarce and valuable to waste on something you could have scripted once and set to run on an appropriate schedule.

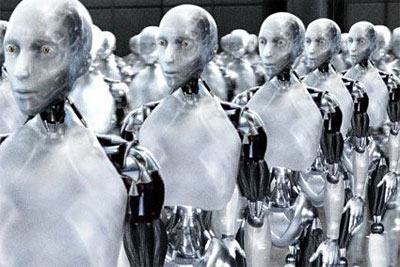

Learn the power of SQL Agent for scheduling tasks. Add PowerShell and command-line tools to take care of the associated operating system tasks, and make your life easier by overseeing an army of robots set up to complete your tasks and make you look good.
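The “checklist on every server” routine is exactly the kind of thing worth scripting once. The sketch below shows the shape of that idea in Python: the checklist becomes data, and a loop runs it against every server and reports only the problems. The `run_checklist` function and the check names are hypothetical; the checks themselves would wrap whatever you actually verify, and the same pattern works just as well in PowerShell or as a SQL Agent job step.

```python
from typing import Callable, Dict, List, Tuple

# A check takes a server name and returns True when everything is healthy.
Check = Callable[[str], bool]


def run_checklist(servers: List[str],
                  checks: Dict[str, Check]) -> List[Tuple[str, str, str]]:
    """Run every check on every server; return (server, check, problem) rows."""
    problems = []
    for server in servers:
        for name, check in checks.items():
            try:
                if not check(server):
                    problems.append((server, name, "failed"))
            except Exception as exc:
                # One unreachable server must not stop the rest of the run.
                problems.append((server, name, f"error: {exc}"))
    return problems
```

An empty result means nothing needs your attention today, which is the whole point: you only look at a server when a robot tells you to.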

Number Four: Meet the Community

SQL Server has, without doubt, the most motivated and engaged tech community I have ever been involved with. Go and meet them. Most reasonably sized cities have a SQL user group. Microsoft has regular events in large cities. But even if you can’t engage in these in person, the online community is vibrant and full of people who are willing and able to help.

Sometimes that help is in the form of answering questions (I think you are more likely to get quick answers to SQL Server questions than for almost any other technology I have been engaged with), sometimes it is detailed blog posts or instructions on how to perform more complex tasks, and sometimes it is the perfect community tool for the task you are working on. If you haven’t already, go and check out sp_whoisactive, Ola Hallengren’s awesome maintenance scripts or dbatools. Every one of these is commercial-grade brilliant and they are all available, along with a community that will tell you how to use them… for free!